Alien Dreams: An Emerging Art Scene

By Charlie Snell

In recent months there has been a bit of an explosion in the AI-generated art scene. Ever since OpenAI released the weights and code for their CLIP model, various hackers, artists, researchers, and deep learning enthusiasts have figured out how to use CLIP as an effective “natural language steering wheel” for various generative models, allowing artists to create all sorts of interesting visual art merely by inputting some text – a caption, a poem, a lyric, a word – to one of these models.
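To make the “steering wheel” idea concrete, here is a toy sketch in Python, the language the Colab notebooks discussed later run on. It is not CLIP or any real model. A random linear map stands in for an image encoder, a random vector stands in for the embedding of a text prompt, and gradient ascent nudges a latent vector so that its encoding points in the same direction as the prompt (higher cosine similarity). Real CLIP-guided pipelines apply the same principle with large neural encoders and automatic differentiation. All names here are hypothetical.

```python
import math
import random


def matvec(m, v):
    # Multiply matrix m (list of rows) by vector v.
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in m]


def transpose(m):
    return [list(col) for col in zip(*m)]


def norm(v):
    return math.sqrt(sum(x * x for x in v))


def cosine(a, b):
    return sum(x * y for x, y in zip(a, b)) / (norm(a) * norm(b))


def steer(latent, image_encoder, text_embedding, steps=200, lr=0.1):
    """Gradient ascent on cosine(image_encoder @ latent, text_embedding)."""
    wt = transpose(image_encoder)
    nt = norm(text_embedding)
    z = list(latent)
    for _ in range(steps):
        u = matvec(image_encoder, z)
        nu = norm(u)
        c = cosine(u, text_embedding)
        # d cos / d u = t / (|u||t|) - cos * u / |u|^2
        grad_u = [t / (nu * nt) - c * ui / (nu * nu)
                  for ui, t in zip(u, text_embedding)]
        # Chain rule through the linear "encoder": d cos / d z = W^T grad_u
        grad_z = matvec(wt, grad_u)
        z = [zi + lr * gi for zi, gi in zip(z, grad_z)]
    return z


random.seed(0)
dim_latent, dim_embed = 8, 4
encoder = [[random.gauss(0, 1) for _ in range(dim_latent)] for _ in range(dim_embed)]
prompt = [random.gauss(0, 1) for _ in range(dim_embed)]  # stand-in for a text embedding
z0 = [random.gauss(0, 1) for _ in range(dim_latent)]

before = cosine(matvec(encoder, z0), prompt)
z1 = steer(z0, encoder, prompt)
after = cosine(matvec(encoder, z1), prompt)
print(f"similarity before: {before:.3f}, after: {after:.3f}")
```

The printed similarity after steering should be noticeably higher than before. Swapping the prompt vector changes where the latent is pushed, which is the sense in which text “steers” the generator.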

I am currently experimenting with AI to create cover art and videos for my music.

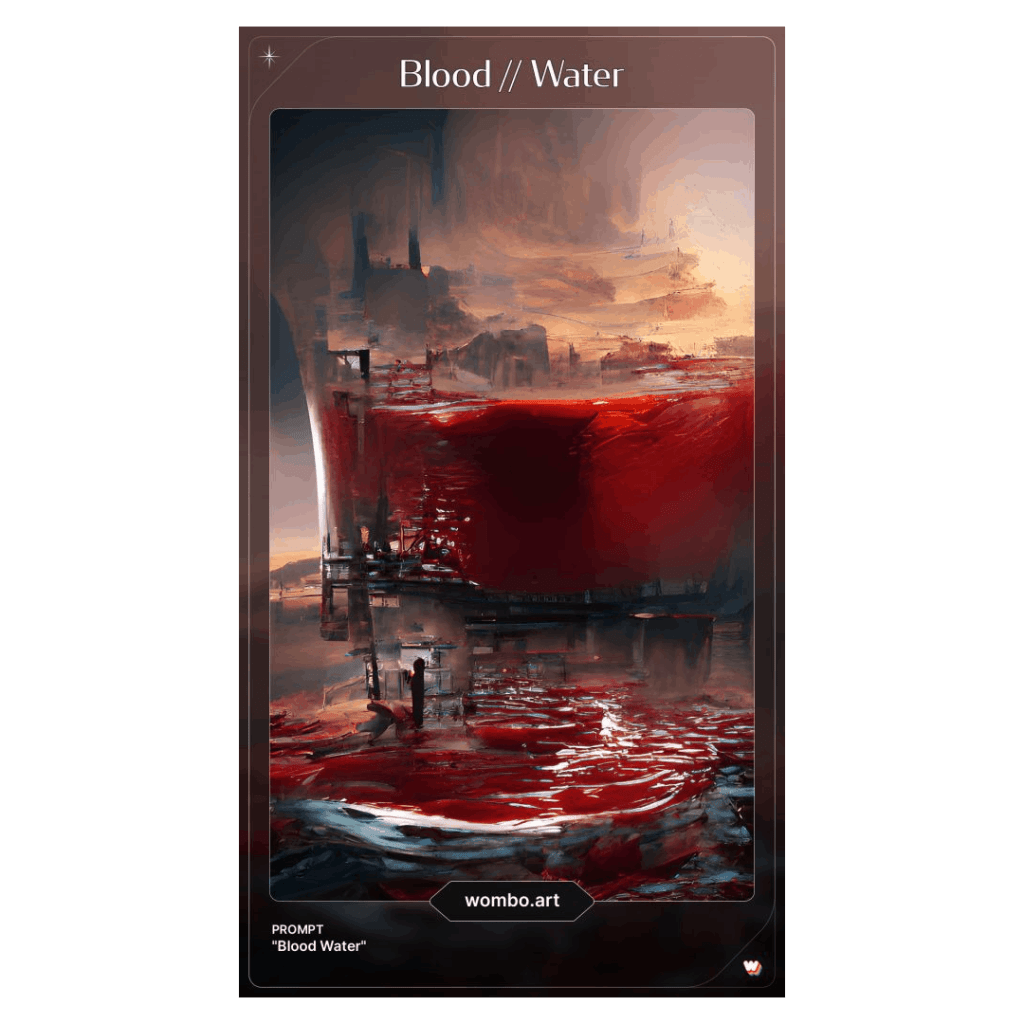

Wombo Dream

My first approach was to use Wombo Dream, which is really easy to use: just enter some keywords, select an art style, and let the magic happen. Wombo creates beautiful artworks using the power of AI!

Wombo Dream has limitations, though. There are no options to change the format or resolution of the generated image, and it cannot generate videos.

While looking for better options, I found Artbreeder.

Artbreeder

Artbreeder is adept at mixing two images into a new artwork. Because the application is AI-based, results are generated automatically within just a few seconds. It also uses several models to get the best possible pictures: models for anime images, paintings, videos, and more are built into the app. Overall, Artbreeder is both an automatic art generator and a community platform for sharing art. Its Compose tool lets users specify the elements they want in an image, while Animate takes two pictures and animates between them.

However, Artbreeder's approach did not quite suit my needs, because I find it much easier to enter keywords and get an artwork as the result.
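This post does not describe how Artbreeder works internally, but GAN-based tools of this kind commonly “mix” two images by blending the latent vectors that generate them, and produce an animation by rendering many intermediate latents. The sketch below assumes that approach and shows only the blending step, with plain Python lists standing in for latent vectors. It uses spherical interpolation (slerp), a common choice for Gaussian latents, with a linear fallback. The function names are hypothetical, and no image generator is included.

```python
import math


def lerp(a, b, t):
    # Straight-line blend: t=0 gives a, t=1 gives b.
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


def slerp(a, b, t):
    """Spherical interpolation between latent vectors a and b."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    dot = sum(x * y for x, y in zip(a, b)) / (na * nb)
    dot = max(-1.0, min(1.0, dot))  # guard acos against rounding error
    omega = math.acos(dot)          # angle between the two latents
    s = math.sin(omega)
    if s < 1e-6:
        # Nearly parallel or opposite: slerp is ill-defined, fall back to lerp.
        return lerp(a, b, t)
    wa = math.sin((1 - t) * omega) / s
    wb = math.sin(t * omega) / s
    return [wa * x + wb * y for x, y in zip(a, b)]


def animation_path(a, b, frames):
    """Latents for an animation from a to b, including both endpoints."""
    if frames < 2:
        raise ValueError("need at least 2 frames")
    return [slerp(a, b, i / (frames - 1)) for i in range(frames)]
```

For example, `slerp([1.0, 0.0], [0.0, 1.0], 0.5)` returns roughly `[0.7071, 0.7071]`, a “child” halfway between the two parents that keeps unit length, whereas `lerp` gives `[0.5, 0.5]`. Feeding each latent from `animation_path` to a generator would yield the frames of a morphing video.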

Continuing my search, I came across VQGAN, a highly configurable AI model that is usually combined with CLIP to turn text prompts into images.

VQGAN

Using Google Colab, an online programming environment, you can run Python code that drives the VQGAN model. Colab is a free notebook environment offered by Google that runs entirely in the cloud. Most importantly, it requires no setup, and notebooks can easily be shared between users.
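To illustrate what “highly configurable” means in practice, and how it addresses the Wombo Dream limitations on format and resolution, here is a hypothetical settings object for a VQGAN+CLIP run. Field names and defaults are illustrative assumptions, not the options of any particular notebook. One detail is modeled on how common VQGAN+CLIP notebooks handle image size: the requested size is rounded down to a multiple of the model's downsampling factor (assumed to be 16 here, as in the “f16” checkpoints) so that the image maps onto a whole grid of VQGAN tokens.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


# Hypothetical settings object; real notebooks name and expose options differently.
@dataclass
class GenerationSettings:
    prompts: List[str]             # text that CLIP steers the image toward
    width: int = 512               # requested output width in pixels
    height: int = 512              # requested output height in pixels
    max_iterations: int = 300      # optimization steps per image
    seed: Optional[int] = None     # set for reproducible results
    downsampling_factor: int = 16  # assumed, as in VQGAN "f16" checkpoints

    def snapped_size(self) -> Tuple[int, int]:
        """Round the size down to a multiple of the downsampling factor."""
        f = self.downsampling_factor
        w = max(f, (self.width // f) * f)
        h = max(f, (self.height // f) * f)
        return w, h

    def token_grid(self) -> Tuple[int, int]:
        """Number of VQGAN tokens along each axis for the snapped size."""
        w, h = self.snapped_size()
        return w // self.downsampling_factor, h // self.downsampling_factor


cover = GenerationSettings(prompts=["neon city at night, synthwave"], width=600, height=600)
video_frame = GenerationSettings(prompts=["alien forest, cinematic"], width=640, height=360, seed=42)
print(cover.snapped_size())        # (592, 592)
print(video_frame.snapped_size())  # (640, 352)
print(video_frame.token_grid())    # (40, 22)
```

A square setting suits cover art, while a wide one suits video frames; keeping the seed fixed makes it easier to regenerate a result after tweaking the prompts.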

About the author

Henri Werner is a 24-year-old composer and producer of electronic music with 7M streams on Spotify and 20M views on YouTube.

In October 2020, Henri was awarded the degree of Master of Music after completing an approved programme in electronic music composition at the University of West London.

Henri curates his own Spotify playlist, “Future Music”, which has 7k followers, and creates music videos for his YouTube channel. He is currently eager to learn more about using AI for video and cover art creation. Stay tuned!

0 Replies to “How I use AI for my music videos and visuals”